Exploring Technology: Innovations and tech advancements.


Introduction

Technology shapes how we learn, work, travel, communicate, and solve complex problems. It is the quiet engine behind healthcare diagnostics, logistics networks, climate monitoring, and the tools we use daily. From the phones in our pockets to the sensors in smart buildings and the algorithms suggesting what we might read next, technology’s relevance lies in its ability to turn information into action. When used thoughtfully, it can increase opportunity, reduce waste, and open new creative frontiers. When misused or left ungoverned, it can strain privacy, widen inequality, and waste resources. This article explores today’s leading tech themes and offers a practical, human-centered roadmap for decision-makers and curious readers alike.

Outline

– The Invisible Infrastructure: Cloud, Edge, and Connectivity

– Machine Intelligence: Practical AI and Responsible Use

– Cyber Resilience: Security, Privacy, and Trust

– Sustainable Technology: Efficiency, Circularity, and Design

– A Pragmatic Roadmap: How to Plan, Pilot, and Scale (Conclusion)

The Invisible Infrastructure: Cloud, Edge, and Connectivity

Much of modern technology feels like magic because the essential parts are invisible. Applications load in seconds, photos sync across devices, and sensors whisper data from remote locations. This fluid experience is made possible by three converging layers: shared computing platforms (often called “the cloud”), localized processing close to where data is created (commonly known as the edge), and ever-faster connectivity. Together they determine how quickly insights flow and how reliably services operate.

Why combine these layers? Centralized platforms excel at elasticity, scaling up during peak demand and shrinking when quiet. Edge computing shines when milliseconds matter, such as in factory automation or autonomous systems, because it shortens the distance data must travel. Connectivity knits it all together: newer mobile network generations and fiber links increase throughput and reduce latency, turning once-impossible use cases into everyday utilities. Analysts estimate that annual global data creation has surpassed 120 zettabytes, a figure that underscores the need for architectures designed to move and process information efficiently.

Consider a retail chain with thousands of sensors tracking temperature, foot traffic, and shelf stock. Sending every data point to a distant data center is costly and slow. Instead, local gateways can filter noise, trigger immediate alerts (like a cooler out of range), and summarize the rest for centralized analysis. This hybrid approach lowers bandwidth costs and improves responsiveness. At the same time, centralized systems can train forecasting models and distribute updates back to local nodes. It’s a choreography: the core handles heavy thinking, the edge handles quick reflexes, and the network keeps them in sync.
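A minimal sketch of the gateway's "quick reflexes" might look like the following. It assumes a hypothetical safe cooler range of 0 to 5 °C and a hypothetical `Reading` record; the point is only the split between immediate local alerts and a compact summary sent to the core.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical safe range for a refrigerated cooler, in degrees Celsius.
COOLER_MIN_C = 0.0
COOLER_MAX_C = 5.0

@dataclass
class Reading:
    sensor_id: str
    celsius: float

def process_batch(readings):
    """Split a batch into immediate alerts and a compact summary for the core."""
    # Filter noise: drop physically implausible values (e.g. a disconnected probe).
    valid = [r for r in readings if -40.0 <= r.celsius <= 60.0]
    # Reflex: anything outside the safe range becomes an alert right away.
    alerts = [r for r in valid if not COOLER_MIN_C <= r.celsius <= COOLER_MAX_C]
    # Everything else is reduced to a few numbers instead of raw data points.
    summary = {
        "count": len(valid),
        "mean_c": round(mean(r.celsius for r in valid), 2) if valid else None,
        "max_c": max((r.celsius for r in valid), default=None),
    }
    return alerts, summary

batch = [Reading("c1", 3.1), Reading("c1", 3.4), Reading("c2", 7.9), Reading("c3", -127.0)]
alerts, summary = process_batch(batch)
print([a.sensor_id for a in alerts])  # ['c2']
print(summary)
```

Only the alert list and the three-field summary would leave the site, rather than every raw reading.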

When evaluating infrastructure strategies, teams often balance:

– Latency: What truly must respond in milliseconds?

– Bandwidth: What can be summarized locally to reduce traffic?

– Reliability: What happens if the network blips or a site goes offline?

– Cost: Are compute and storage allocated where they deliver the most value?

– Compliance: Are data locality and retention requirements respected?

Organizations that document these trade-offs and rehearse failure modes typically achieve higher uptime and smoother user experiences. The quiet magic of technology is no accident; it is the result of intentional design across cloud, edge, and connectivity layers that anticipate both everyday use and rare disruptions.
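One common answer to "what happens if the network blips?" is store-and-forward: queue messages locally and flush them in order once the link returns. The sketch below assumes a hypothetical `send` callable that returns `True` on success; the bounded buffer (dropping the oldest message when full) is one design choice among several, picked here so a long outage cannot exhaust local storage.

```python
from collections import deque

class StoreAndForward:
    """Buffer messages while the uplink is down; flush them in order when it returns."""

    def __init__(self, send, max_buffered=1000):
        self.send = send  # hypothetical: returns True if delivered, False if the link is down
        self.pending = deque(maxlen=max_buffered)  # oldest entries drop when full

    def publish(self, message):
        self.pending.append(message)
        self.flush()

    def flush(self):
        # Send in arrival order; stop at the first failure and keep the rest queued.
        while self.pending:
            if not self.send(self.pending[0]):
                return False
            self.pending.popleft()
        return True

# Simulated outage: the link is down while five messages arrive.
delivered = []
link_up = False

def fake_send(msg):
    if link_up:
        delivered.append(msg)
        return True
    return False

buf = StoreAndForward(fake_send, max_buffered=3)
for i in range(5):
    buf.publish(i)  # only the three newest messages fit in the buffer
link_up = True
buf.flush()
print(delivered)  # [2, 3, 4]
```

Rehearsing a failure mode can be as simple as running a scenario like this and checking that what arrives matches what the design promised.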

Machine Intelligence: Practical AI and Responsible Use

Machine intelligence has graduated from novelty to necessity. Pattern recognition models categorize images, detect anomalies in equipment, and extract structure from documents. Language models assist with drafting, summarizing, and search, while recommendation algorithms suggest content and products. Despite the variety, the goal is consistent: transform raw data into timely decisions that augment human judgment rather than replace it.

Practical value emerges where AI is embedded into workflows. In customer support, models triage routine questions and surface context for agents, reducing wait times. In finance operations, anomaly detection flags unusual transactions before they become losses. In maintenance, predictive models turn break-fix cycles into planned interventions, reducing downtime. Teams report measurable gains—shorter processing times, fewer errors, and improved satisfaction—when they combine well-scoped objectives with strong data hygiene and clear handoffs to humans.
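As an illustration of anomaly detection on transactions, here is a small sketch using a median-based "modified z-score". This is one simple technique chosen for the example, not a description of any particular product. The 0.6745 constant and the 3.5 cut-off are a commonly used rule of thumb and should be treated as tunable assumptions.

```python
from statistics import median

def flag_unusual(amounts, threshold=3.5):
    """Return indices of amounts whose modified z-score exceeds `threshold`.

    The median and median absolute deviation (MAD) resist being dragged around
    by a single huge value, unlike a mean and standard deviation.
    """
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # no spread to measure against
    return [i for i, a in enumerate(amounts)
            if 0.6745 * abs(a - med) / mad > threshold]

history = [42.0, 38.5, 40.0, 45.2, 39.9, 41.3, 980.0, 43.1]
print(flag_unusual(history))  # [6]
```

In a real workflow, a flagged index would be routed to a person for review rather than blocked automatically, in line with the human handoffs described above.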

Yet capabilities must be matched with responsibility. Bias can creep in when historical data reflects skewed outcomes or underrepresents certain groups. Transparency becomes crucial: stakeholders need to understand how a prediction was made and how to appeal it. Privacy is another pillar; models should minimize exposure of sensitive personal information and honor regional expectations for data rights. Rather than treat responsibility as a constraint, leading practitioners frame it as design input that improves robustness and trust.

To operationalize AI, many teams adopt simple guardrails:

– Purpose limitation: Define the specific decision each model supports.

– Data minimization: Use the smallest dataset that meets the objective.

– Evaluation discipline: Measure accuracy, false positives, and fairness across segments.

– Human-in-the-loop: Keep critical decisions reviewable and reversible.

– Change control: Version datasets and models to trace outcomes over time.
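The "evaluation discipline" guardrail can start very small. The sketch below assumes each record is a hypothetical `(segment, predicted, actual)` tuple with boolean labels and reports accuracy and false-positive rate per segment, so a model that looks fine on average but underperforms for one group stands out.

```python
from collections import defaultdict

def metrics_by_segment(records):
    """Compute accuracy and false-positive rate for each segment."""
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "fp": 0, "negatives": 0})
    for segment, predicted, actual in records:
        c = counts[segment]
        c["n"] += 1
        c["correct"] += predicted == actual
        if not actual:
            c["negatives"] += 1
            c["fp"] += predicted  # flagged, but nothing was actually wrong
    return {
        seg: {
            "accuracy": c["correct"] / c["n"],
            "false_positive_rate": c["fp"] / c["negatives"] if c["negatives"] else None,
        }
        for seg, c in counts.items()
    }

# Hypothetical labeled outcomes for two customer regions.
records = [
    ("region_a", True, True), ("region_a", False, False),
    ("region_a", False, False), ("region_a", True, False),
    ("region_b", True, False), ("region_b", True, False),
    ("region_b", False, False), ("region_b", True, True),
]
for segment, m in metrics_by_segment(records).items():
    print(segment, m)
```

Here region_b has double the false-positive rate of region_a, which is the kind of gap worth investigating before a model ships.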

There is also a performance-cost trade-off. Larger models can be powerful but may be slower and more expensive. Smaller, task-specific models are often faster and easier to monitor. A common pattern is to use compact models at the edge for quick filters and dispatch complex queries to centralized services when necessary. The artistry lies in choosing the lightest approach that reliably solves the problem—much like selecting the right tool rather than the largest toolbox.
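The "compact model first, escalate when needed" pattern can be sketched as a confidence-based router. Both models below are hypothetical stand-ins, and the 0.8 threshold is an assumption that would be tuned against real evaluation data.

```python
from typing import Callable, Tuple

def route(query: str,
          small_model: Callable[[str], Tuple[str, float]],
          large_model: Callable[[str], str],
          min_confidence: float = 0.8) -> Tuple[str, str]:
    """Answer locally when the compact model is confident; otherwise escalate."""
    answer, confidence = small_model(query)
    if confidence >= min_confidence:
        return answer, "edge"
    return large_model(query), "central"

def tiny_classifier(query):
    # Hypothetical edge model: confident only on a few familiar intents.
    if query.lower() in {"store hours", "return policy"}:
        return "faq", 0.95
    return "unknown", 0.3

def central_service(query):
    # Hypothetical larger, slower, more expensive model.
    return f"detailed answer for: {query}"

print(route("store hours", tiny_classifier, central_service))
print(route("compare warranty terms", tiny_classifier, central_service))
```

The threshold is the main lever: raising it sends more traffic to the expensive path in exchange for fewer low-confidence local answers.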

Cyber Resilience: Security, Privacy, and Trust

As our lives move online, resilience is as critical as innovation. Headlines tend to focus on breaches, but the broader story is about trust: how systems defend against misuse, protect personal information, and recover quickly from incidents. Security is not a product; it is a posture that combines technology, process, and culture.

Threats evolve rapidly. Attackers automate scans for vulnerabilities, craft targeted social engineering, and exploit third-party dependencies. Meanwhile, organizations maintain sprawling inventories of devices, applications, and data. Complexity is the adversary’s ally. Simplifying architectures, maintaining accurate asset inventories, and automating updates can shrink the attack surface and reduce the number of “unknown unknowns.”

Privacy is the twin of security. People want control over their information: what is collected, why, and for how long. Many regions now enforce comprehensive data rights, prompting organizations to codify retention schedules and consent practices. Interestingly, privacy-by-design often improves operational efficiency. When teams collect less data and delete it on schedule, they reduce storage costs and lower risk exposure.
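A retention schedule becomes enforceable once it is expressed as data. In this sketch the categories and durations are hypothetical. Records in categories without a schedule are deliberately kept for review rather than silently deleted; that is a design choice, not a requirement.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention schedule: how long each category of record is kept.
RETENTION = {
    "support_ticket": timedelta(days=365),
    "web_log": timedelta(days=30),
}

def purge_expired(records, now=None):
    """Return only records still inside their retention window.

    Each record is a dict with a 'category' and a timezone-aware 'created_at'.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    kept = []
    for rec in records:
        limit = RETENTION.get(rec["category"])
        if limit is None or now - rec["created_at"] <= limit:
            kept.append(rec)
    return kept

now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "category": "web_log", "created_at": now - timedelta(days=10)},
    {"id": 2, "category": "web_log", "created_at": now - timedelta(days=45)},
    {"id": 3, "category": "support_ticket", "created_at": now - timedelta(days=200)},
]
print([r["id"] for r in purge_expired(records, now)])  # [1, 3]
```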

Building resilience involves layered defenses and realistic drills:

– Identity and access: Enforce strong authentication and least privilege by role.

– Visibility: Continuously monitor logs and telemetry for anomalies.

– Segmentation: Isolate critical systems to limit blast radius.

– Backup and recovery: Test restores regularly, not just backups.

– Incident playbooks: Rehearse coordinated responses with communications plans.
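Least privilege by role often reduces to a deny-by-default check: access is granted only if one of the user's roles explicitly includes the permission. The roles and permission strings below are hypothetical.

```python
# Hypothetical role-to-permission map; each role gets only what its job requires.
ROLE_PERMISSIONS = {
    "support_agent": {"ticket:read", "ticket:update"},
    "billing_analyst": {"invoice:read"},
    "admin": {"ticket:read", "ticket:update", "invoice:read", "user:manage"},
}

def is_allowed(roles, permission):
    """Deny by default: allow only if some assigned role grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)

print(is_allowed({"support_agent"}, "ticket:update"))  # True
print(is_allowed({"support_agent"}, "invoice:read"))   # False
print(is_allowed({"unknown_role"}, "ticket:read"))     # False
```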

Quantifying risk helps leaders prioritize. Downtime can cost thousands per minute in some industries, while regulatory penalties and reputational harm can linger. By assigning likelihood and impact scores to risks—and mapping controls to those risks—teams can justify investments in monitoring, training, and modernization. The result is not invincibility but graceful degradation: even when something goes wrong, services remain usable, data stays protected, and recovery is swift. Trust, once earned and nurtured, becomes a competitive advantage that compounds over time.
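A simple risk register makes the likelihood-and-impact idea concrete. This sketch assumes 1 to 5 scales and multiplies them into a score, which is one common convention among several. The risks and controls listed are illustrative examples, not an assessment.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (minor) to 5 (severe)
    controls: tuple = ()

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

def prioritize(risks):
    """Order risks from highest to lowest likelihood-times-impact score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)

register = [
    Risk("Phishing leads to account takeover", 4, 4, ("MFA", "awareness training")),
    Risk("Ransomware on file servers", 2, 5, ("offline backups", "segmentation")),
    Risk("Expired TLS certificate", 3, 2, ("certificate monitoring",)),
]
for r in prioritize(register):
    print(r.score, r.name, "->", ", ".join(r.controls))
```

Mapping each risk to its controls in the same record keeps the link between spending and the risk it addresses visible.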

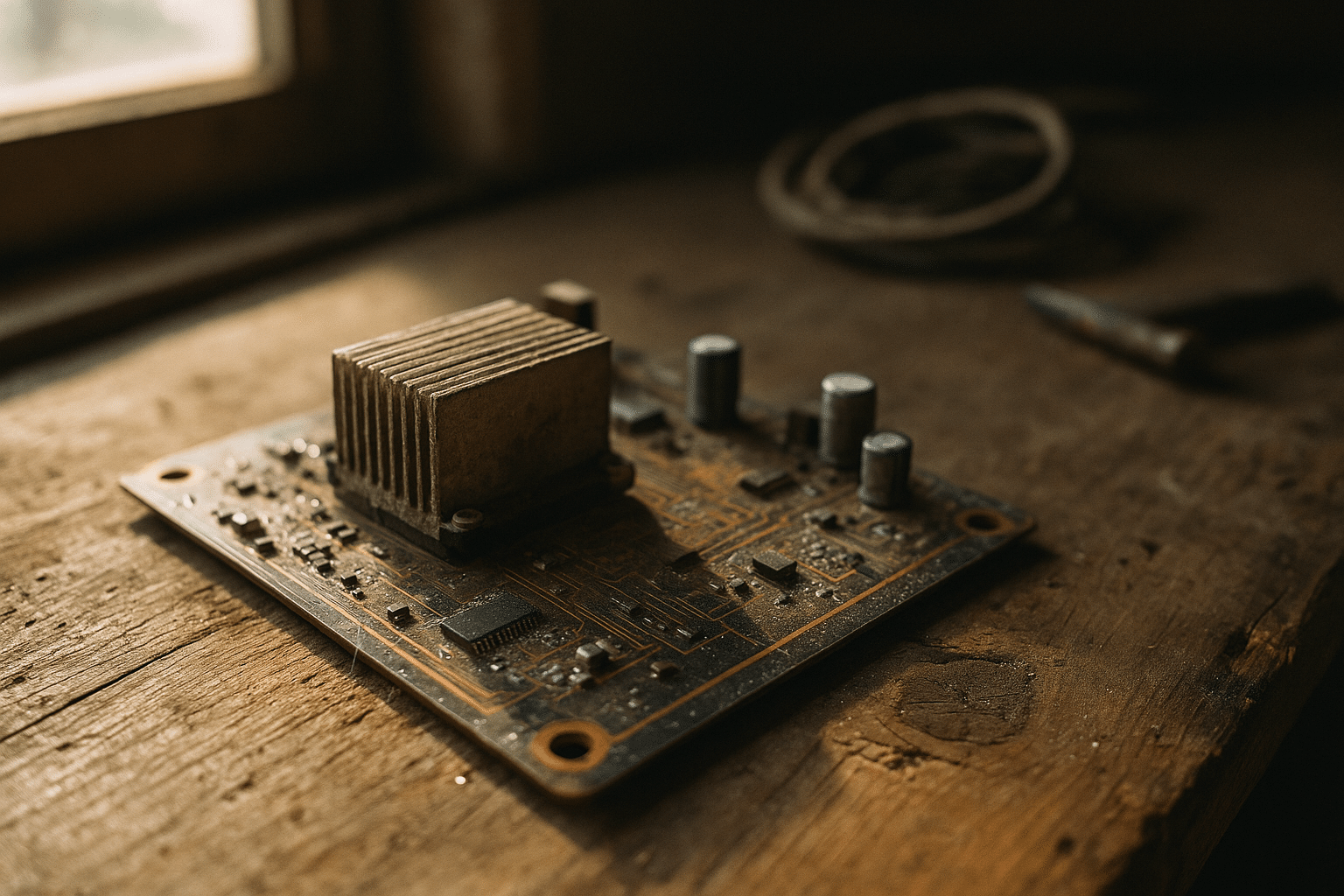

Sustainable Technology: Efficiency, Circularity, and Design

Technology’s footprint is physical: energy for computation, water for cooling, and materials for devices. Data centers consume an appreciable share of global electricity, estimated in the low single-digit percentages. Electronic waste exceeds 50 million metric tons each year worldwide. These figures are not reasons to halt innovation; they are invitations to design with restraint and responsibility.

Efficiency is the most immediate lever. Well-tuned applications can reduce compute cycles and storage needs, lowering both cost and emissions. Simple tactics—batching work, compressing media, and caching—deliver outsized gains. Choosing appropriate hardware, consolidating underutilized servers, and matching workloads to locations with cleaner grids can further reduce impact. Observability matters: when teams measure energy and resource usage, they often uncover quick wins that also improve performance.
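Caching is often the cheapest of these wins. The sketch below uses Python's standard `functools.lru_cache` around a hypothetical rendering function and counts how often the expensive work actually runs. Caching is only appropriate when a result can safely be reused for the same input.

```python
from functools import lru_cache

calls = 0

@lru_cache(maxsize=256)
def render_product_card(product_id: int) -> str:
    """Hypothetical stand-in for an expensive render or database query."""
    global calls
    calls += 1  # counts real executions; cache hits skip this body entirely
    return f"<div class='card'>Product {product_id}</div>"

# Ten requests, but only three distinct products.
for pid in [1, 2, 1, 3, 1, 2, 1, 3, 2, 1]:
    render_product_card(pid)

print(calls)                                  # 3
print(render_product_card.cache_info().hits)  # 7
```

Seven of the ten requests were served without recomputation; the trade-off is memory for the cache and the need to invalidate entries when the underlying data changes.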

Circularity addresses the full lifecycle. Devices should be designed for repair, upgrade, and safe recycling. Modular components make it easier to extend a device’s life by replacing parts instead of entire units. Procurement policies can encourage take-back programs and certified refurbishing partners. On the software side, extending support for older hardware keeps devices useful longer, reducing turnover and waste.

Sustainable design is also about user experience. Lightweight websites and apps reduce data transfer for everyone, especially where connectivity is constrained. On OLED screens, where dark pixels draw less power than bright ones, darker themes can reduce energy consumption. More broadly, thoughtful defaults, such as leaving nonessential notifications switched off, cut background processing and user distraction.

Practical steps many teams adopt include:

– Measure: Track energy per transaction, per user session, or per job.

– Optimize: Profile slow paths and reduce redundant data movement.

– Locate: Run heavy workloads in regions, or at times, with lower grid carbon intensity where feasible.

– Extend: Prioritize maintenance and repair over replacement.

– Educate: Share guidelines so developers and buyers can make informed choices.
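The "Locate" step can be automated for deferrable jobs: given an hourly forecast of grid carbon intensity, pick the contiguous window with the lowest average. The forecast values below are hypothetical; in practice they would come from a grid operator or forecasting service.

```python
def best_window(forecast, hours_needed):
    """Return (start_index, average) of the lowest-average contiguous window.

    `forecast` is a list of hourly carbon-intensity values (e.g. gCO2/kWh).
    """
    if not 0 < hours_needed <= len(forecast):
        raise ValueError("hours_needed must fit inside the forecast")
    window = sum(forecast[:hours_needed])
    best_start, best_sum = 0, window
    for start in range(1, len(forecast) - hours_needed + 1):
        # Slide the window one hour: add the newest hour, drop the oldest.
        window += forecast[start + hours_needed - 1] - forecast[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    return best_start, best_sum / hours_needed

# Hypothetical 8-hour forecast for a 3-hour batch job.
forecast = [420, 390, 310, 220, 180, 210, 350, 400]
start, avg = best_window(forecast, 3)
print(start)  # 3
```

The sliding sum keeps the search linear in the forecast length, which matters little for eight hours but helps when scanning days of forecasts for many jobs.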

Progress compounds. Small efficiency gains at the application level, multiplied by millions of users, translate into significant resource savings. Sustainable technology is not a separate project; it is an approach that aligns operational excellence with environmental stewardship.

A Pragmatic Roadmap: How to Plan, Pilot, and Scale (Conclusion)

Technology strategies succeed when they align with real needs. Instead of chasing trends, effective teams start with a portfolio of use cases ranked by impact and feasibility. The goal is not to implement every new idea but to cultivate a repeatable path from concept to value. Think of it as building a runway while taxiing: short, safe segments that cumulatively support larger ambitions.

A practical roadmap often follows four stages:

– Discover: Identify pain points and opportunities with clear metrics, such as cycle time, error rates, or energy per transaction.

– Design: Draft simple architectures that show data sources, decision points, and failure modes. Assign ownership early.

– Pilot: Run small experiments with narrow scope and short feedback loops. Success criteria should be objective and pre-defined.

– Scale: Standardize what works with documentation, templates, and training. Create playbooks so new teams can replicate results.
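"Objective and pre-defined" success criteria can be written down as data before a pilot starts and checked mechanically afterwards. The metric names and targets below are hypothetical placeholders; a metric missing from the results counts as a failure so gaps in measurement are not mistaken for success.

```python
# Success criteria agreed before the pilot starts (hypothetical targets).
CRITERIA = {
    "cycle_time_hours": ("max", 24.0),
    "error_rate": ("max", 0.02),
    "user_satisfaction": ("min", 4.0),
}

def evaluate_pilot(results, criteria=CRITERIA):
    """Compare measured results to pre-defined thresholds; missing metrics fail."""
    report = {}
    for metric, (direction, threshold) in criteria.items():
        value = results.get(metric)
        if value is None:
            report[metric] = False
        elif direction == "max":
            report[metric] = value <= threshold
        else:
            report[metric] = value >= threshold
    return all(report.values()), report

passed, report = evaluate_pilot(
    {"cycle_time_hours": 18.5, "error_rate": 0.031, "user_satisfaction": 4.3}
)
print(passed)  # False
print(report)  # {'cycle_time_hours': True, 'error_rate': False, 'user_satisfaction': True}
```

A failed check like the error rate here is still useful output: it tells the team exactly what to fix before deciding whether to scale.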

Throughout, governance provides guardrails without stifling creativity. Security, privacy, and sustainability reviews can be embedded in the lifecycle via lightweight checklists. Clear thresholds—when to involve legal, when to escalate a risk, when to archive data—keep momentum while honoring responsibilities. Humane change management acknowledges that new tools alter habits; training and support should accompany launches, not lag behind.

For leaders, the call to action is straightforward. Invest in foundational capabilities—observability, identity management, data quality—that pay dividends across projects. Encourage cross-functional teams so infrastructure, data, and product concerns are addressed together. Reward learning: celebrate pilots that fail fast and share lessons. These practices build confidence, reduce surprises, and accelerate time to value.

For practitioners and enthusiasts, the invitation is to stay curious but grounded. Learn the principles beneath the buzzwords: latency, throughput, model evaluation, lifecycle assessment. Build small things that matter, measure them honestly, and improve them steadily. Technology is not a sprint to a finish line; it is a craft shaped by constraints, ethics, and imagination. When we pair rigor with empathy—designing for real people in real conditions—we produce tools that are not only powerful but also trustworthy and sustainable. That is progress worth pursuing, one well-designed system at a time.