Emerging Technology Trends: Practical Innovations and What They Mean for You

Introduction

Technology evolves in waves that feel both familiar and disruptive. Familiar, because each cycle promises more capability at lower cost; disruptive, because every cycle rearranges how we work, connect, and compete. This article looks at emerging trends through a practical lens: what they are, where they help, where they fall short, and how to prepare. Instead of hype, you will find measured takeaways with examples you can apply in daily decisions—from adopting artificial intelligence in workflows to choosing greener devices and designing for privacy.

Outline

– A grounded look at everyday artificial intelligence: what it can do now, where it struggles, and how to measure value.

– Edge computing and the internet of things: why moving compute closer to data changes latency, reliability, and cost.

– Sustainable hardware and cloud efficiency: balancing performance with energy, materials, and lifecycle choices.

– Privacy-by-design and resilient security: rethinking trust for a data-saturated world.

– A practical adoption roadmap: skills, procurement, governance, and metrics that keep teams focused and realistic.

Everyday AI: From Novelty to Useful Co‑Pilot

Artificial intelligence has shifted from research labs to everyday tools. Text generation, code assistance, image classification, audio transcription, and predictive analytics are now accessible without specialized training. The core story is not about replacing people, but about augmenting routine tasks so that individuals can focus on judgment, relationships, and creativity. In controlled studies, AI assistance has shown notable gains for certain categories of work: drafts produced more quickly, routine coding steps accelerated, and summaries prepared in minutes rather than hours. Across multiple experiments reported by academics and industry groups, time-to-completion improvements of roughly 20–40 percent have appeared for structured tasks, with the largest gains often observed among less-experienced users. That does not make AI a magic wand; it makes it a practical co‑pilot for well-scoped activities.

To separate promise from practice, it helps to evaluate AI with the same rigor used for any tool. Consider three dimensions: quality, cost, and risk. Quality focuses on accuracy, relevance, and consistency. Cost includes both direct usage fees and hidden costs like rework, oversight, and performance overhead on devices. Risk spans privacy, security, and compliance exposure. A simple pilot can track baseline metrics—how long a task takes without AI, how long with AI, error rates in each case, and the review time required—then determine whether the net effect is positive. The goal is to “trust, but verify” with light-touch governance.
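
The pilot described above can be reduced to a small calculation. The following is a minimal sketch, assuming you have already collected average timings and error rates for both workflows; the `TaskStats` fields, the helper names, and all sample numbers are hypothetical and only illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class TaskStats:
    """Average measurements for one way of doing a task (values are hypothetical)."""
    minutes_per_task: float  # hands-on time to produce the output
    review_minutes: float    # time a reviewer spends checking it
    error_rate: float        # fraction of outputs that need rework (0.0 to 1.0)
    rework_minutes: float    # average time to fix one faulty output


def effective_minutes(stats: TaskStats) -> float:
    """Expected total minutes per task, including review and expected rework."""
    return (stats.minutes_per_task
            + stats.review_minutes
            + stats.error_rate * stats.rework_minutes)


def net_saving_percent(baseline: TaskStats, with_ai: TaskStats) -> float:
    """Positive values mean the AI-assisted workflow is faster overall."""
    before = effective_minutes(baseline)
    after = effective_minutes(with_ai)
    return 100.0 * (before - after) / before


# Illustrative pilot data, not measurements from any real study.
baseline = TaskStats(minutes_per_task=60, review_minutes=10, error_rate=0.05, rework_minutes=30)
with_ai = TaskStats(minutes_per_task=35, review_minutes=15, error_rate=0.08, rework_minutes=30)
print(f"Net time saving: {net_saving_percent(baseline, with_ai):.1f}%")
```

Note that the AI-assisted case in this example has a higher review time and error rate; the net effect is still positive only because the drafting time drops by more than the added oversight costs.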

Where AI excels today is pattern-heavy work with abundant training examples: standard customer inquiries, classification of documents, extraction of key fields from forms, suggested code snippets, and initial drafts for reports. Where it struggles is precise numerical reasoning, domain-specific edge cases without sufficient training data, and contexts that require knowledge more recent than the model's last update. Outputs can sound confident yet be incorrect, a failure mode often described as hallucination. Well-designed workflows stage AI outputs as suggestions rather than final answers, making it easy to accept, edit, or reject them. That separation maintains human accountability while preserving the speed benefit.

Practical implementation tips include a few recurring patterns:

– Keep humans in the loop for final approvals in high-stakes processes.

– Use retrieval techniques to ground AI answers in your verified documents.

– Maintain small, task-specific models or prompts to handle well-defined jobs.

– Log prompts and outputs for quality audits, while respecting privacy rules.

– Start with low-risk internal use cases, then expand step by step.

A simple rule of thumb: if the downside of a wrong answer is large, require evidence and human sign‑off; if the downside is small, let AI operate more autonomously with monitoring. Used this way, AI becomes a calm assistant rather than a dramatic disruptor, speeding up routine tasks while leaving judgment and nuance to people.
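
One way to make that rule explicit is a small routing function. This is only a sketch: the `Route` names, the `route_output` function, and the cost threshold are hypothetical, and a real policy would likely weigh more factors than a single estimated cost.

```python
from enum import Enum


class Route(Enum):
    AUTO_WITH_MONITORING = "auto_with_monitoring"
    NEEDS_EVIDENCE = "needs_evidence"
    HUMAN_SIGN_OFF = "human_sign_off"


def route_output(downside_cost: float, has_evidence: bool,
                 high_stakes_threshold: float = 1000.0) -> Route:
    """Decide how an AI output is handled, based on the cost of being wrong.

    downside_cost: estimated cost of acting on a wrong answer (any consistent unit)
    has_evidence: whether the output cites sources a reviewer can check
    high_stakes_threshold: assumed cut-off between low and high stakes
    """
    if downside_cost < high_stakes_threshold:
        return Route.AUTO_WITH_MONITORING  # small downside: proceed, but keep logs
    if not has_evidence:
        return Route.NEEDS_EVIDENCE        # large downside and nothing to verify against
    return Route.HUMAN_SIGN_OFF            # large downside: a person approves


print(route_output(downside_cost=50, has_evidence=False).value)
print(route_output(downside_cost=25_000, has_evidence=True).value)
```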

Edge Computing and IoT: Putting Compute Where the Data Lives

Data volumes are growing faster at the edges of networks than in centralized locations. Cameras, sensors, point-of-sale terminals, industrial instruments, and vehicles produce streams that exceed the bandwidth available to ship them upstream. Edge computing addresses this by moving compute and storage closer to the source (on gateways, embedded modules, or on-premises micro-servers) so you send only what matters, when it matters. The most immediate benefits are lower latency, greater resilience, and better bandwidth efficiency. In applications like vision-based defect detection, local inference can cut latency from hundreds of milliseconds to a few dozen milliseconds or less, which matters for safety and real-time control. For bandwidth, pre-processing video at the edge (for example, extracting events rather than streaming raw footage) can reduce uplink traffic dramatically; reductions of 80–95 percent are commonly reported in field deployments when only metadata or flagged segments are transmitted.
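
A back-of-the-envelope estimate shows where reductions of that size come from. The sketch below assumes a simple model in which the edge node forwards flagged video segments at the raw bitrate plus a constant metadata stream; the function name and the camera figures are hypothetical.

```python
def uplink_reduction_percent(raw_mbps: float, event_fraction: float,
                             metadata_kbps: float) -> float:
    """Percent reduction in uplink traffic versus streaming raw video.

    raw_mbps: bitrate of the raw video stream
    event_fraction: share of time that contains flagged events (0.0 to 1.0)
    metadata_kbps: constant bitrate of event metadata
    """
    edge_mbps = raw_mbps * event_fraction + metadata_kbps / 1000.0
    return 100.0 * (1.0 - edge_mbps / raw_mbps)


# Hypothetical camera: 4 Mbps raw stream, events 10% of the time, 20 kbps metadata.
reduction = uplink_reduction_percent(raw_mbps=4.0, event_fraction=0.10, metadata_kbps=20.0)
print(f"Uplink reduction: {reduction:.1f}%")
```

Under these assumptions the result depends almost entirely on `event_fraction`, so measuring how often events actually occur is the first step in sizing the uplink.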

Reliability is another driver. If a retail checkout lane or factory cell depends entirely on a distant cloud, an outage or network congestion can halt operations. A tiered architecture—edge first, cloud next—lets local systems keep running and sync asynchronously when connections stabilize. Comparisons are straightforward:

– Cloud-only: simple to deploy, centralized control, but sensitive to network conditions and potentially higher ongoing bandwidth costs.

– Edge-first: lower latency and bandwidth, continued operation during connectivity issues, but more distributed assets to manage.

– Hybrid: a pragmatic middle ground where the edge handles time-critical work and the cloud handles aggregation, analytics, and fleet updates.
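
The "keep running locally, sync when the connection returns" behavior is often called store-and-forward. Below is a minimal in-memory sketch of that pattern; the `StoreAndForward` class and the `fake_send` callback are hypothetical, and a production version would typically persist the buffer to disk and add retry backoff.

```python
from collections import deque
from typing import Callable, Deque, Dict


class StoreAndForward:
    """Buffers events locally and uploads them when the uplink works."""

    def __init__(self, send: Callable[[Dict], bool], max_buffered: int = 1000) -> None:
        self._send = send  # returns True if the upload succeeded
        # When the buffer is full, the oldest events are dropped first.
        self._queue: Deque[Dict] = deque(maxlen=max_buffered)

    def record(self, event: Dict) -> None:
        """Always succeeds locally, so edge logic never blocks on the network."""
        self._queue.append(event)

    def flush(self) -> int:
        """Upload buffered events in order; stop at the first failure."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # uplink still down; keep the event for the next attempt
            self._queue.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._queue)


# Simulated uplink that can be toggled on and off.
link = {"up": False}
uploaded = []


def fake_send(event: Dict) -> bool:
    if link["up"]:
        uploaded.append(event)
    return link["up"]


buffer = StoreAndForward(fake_send)
buffer.record({"lane": 3, "status": "sale_completed"})
buffer.flush()            # uplink down: nothing is sent, the event stays buffered
link["up"] = True
buffer.flush()            # uplink restored: the buffered event is uploaded
print(buffer.pending, len(uploaded))
```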

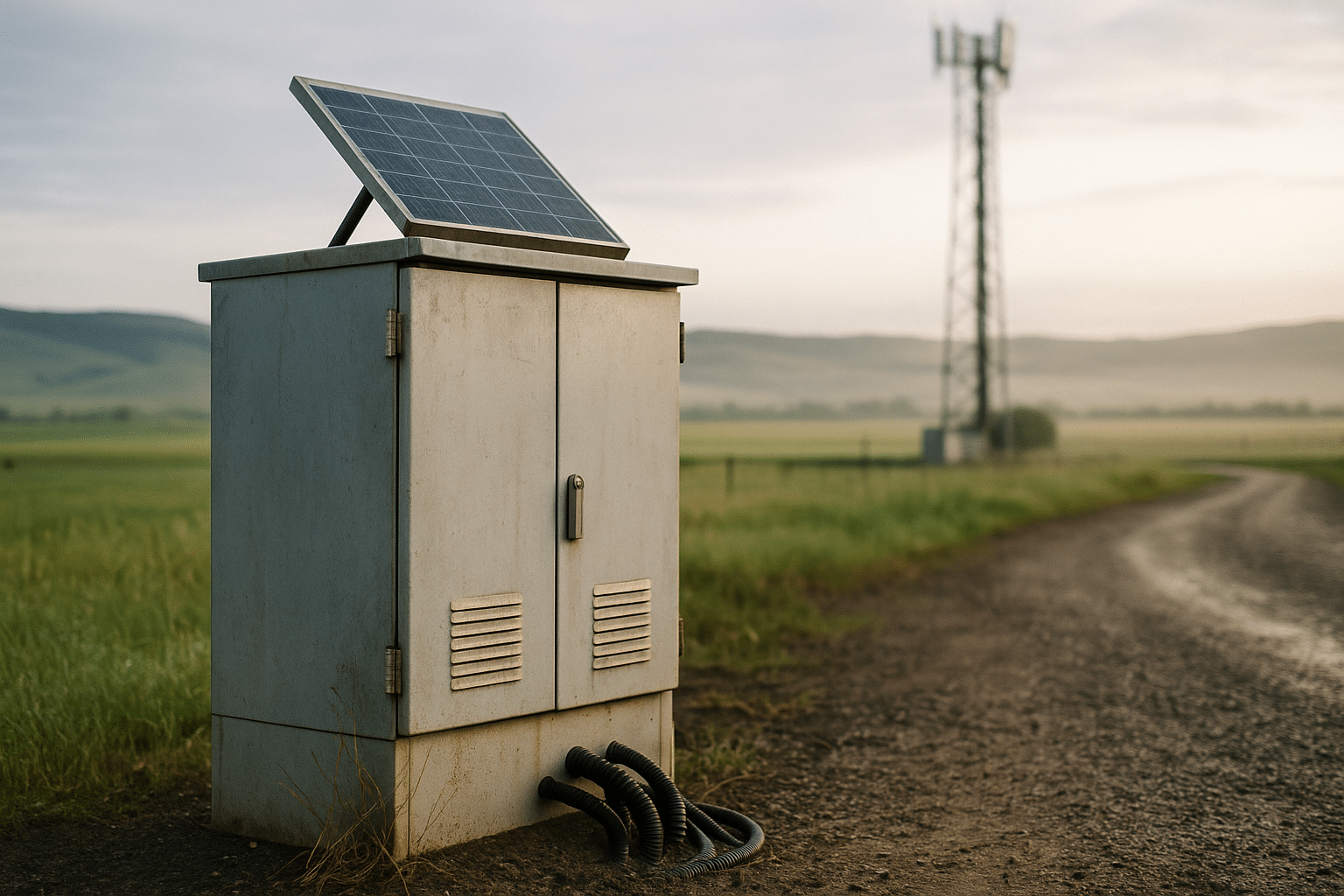

Designing an edge solution introduces new considerations. Physical environments can be harsh: temperature fluctuations, dust, vibration, and limited power. Hardware selection should match the duty cycle and conditions: fanless systems for dusty locations, ruggedized enclosures for vibration, and power-aware chips for solar or battery setups. Security expands beyond passwords; device identity, secure boot, signed updates, and remote attestation keep nodes trustworthy. Observability also shifts: you need to know which devices are online, which models they run, how they perform, and how they drift over time. Lightweight telemetry at the edge—with rollups to a central console—helps teams track health without saturating the network.
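
To illustrate what a lightweight rollup might look like, the sketch below condenses a window of per-inference samples into one small summary record for the central console. The field names (`latency_ms`, `model_version`) and the `rollup` function are assumptions made for this example, not a standard telemetry schema.

```python
from statistics import mean
from typing import Dict, List


def rollup(device_id: str, samples: List[Dict]) -> Dict:
    """Summarize a window of local samples into a single record to send upstream."""
    if not samples:
        raise ValueError("cannot roll up an empty window")
    latencies = [s["latency_ms"] for s in samples]
    return {
        "device_id": device_id,
        "sample_count": len(samples),
        "latency_ms_mean": round(mean(latencies), 1),
        "latency_ms_max": max(latencies),
        # Report the model version seen most recently in the window.
        "model_version": samples[-1]["model_version"],
    }


window = [
    {"latency_ms": 12.0, "model_version": "v7"},
    {"latency_ms": 18.0, "model_version": "v7"},
    {"latency_ms": 30.0, "model_version": "v7"},
]
print(rollup("gateway-01", window))
```

Sending one summary per window instead of every sample keeps telemetry traffic roughly constant regardless of how busy the device is.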

Adoption patterns often begin with a single use case that has measurable value. For instance, a logistics site might add edge analytics to detect blocked loading zones or count forklifts by zone. A farm might process soil moisture and weather data locally to drive irrigation in near real time. A clinic may pre-process imaging data to triage cases faster while keeping sensitive raw data on premises. Once the first win is validated, the same platform can host new use cases at incremental cost. The key is to start small, select robust hardware and maintainable software, and design for secure updates from day one.

Sustainable Compute: Efficiency, Lifecycle, and Measurable Impact

Technology’s environmental footprint spans energy use, embodied carbon in manufacturing, and end-of-life e‑waste. Decisions made by buyers and builders influence all three. On the energy side, two concepts matter: workload efficiency and facility efficiency. Workload efficiency measures how well code uses compute; optimizing algorithms, right-sizing models, and scheduling workloads intelligently can reduce energy draw substantially. Facility efficiency is often summarized by power usage effectiveness (PUE), the ratio of total facility power to IT equipment power. Modern, well-optimized data centers report PUE values near 1.2 under typical conditions, while less efficient facilities can exceed 2.0. That spread means the same IT load draws about two-thirds more total facility energy at a PUE of 2.0 than at 1.2 (2.0 / 1.2 ≈ 1.67).
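
Because PUE is defined as total facility power divided by IT equipment power, total energy follows directly from the IT load. The short sketch below applies that definition; the function name and the 1,000 kWh workload are hypothetical.

```python
def total_facility_kwh(it_kwh: float, pue: float) -> float:
    """Total facility energy implied by an IT load and a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0 by definition")
    return it_kwh * pue


it_load_kwh = 1000.0  # hypothetical IT energy for one workload
efficient = total_facility_kwh(it_load_kwh, pue=1.2)
inefficient = total_facility_kwh(it_load_kwh, pue=2.0)
print(f"PUE 1.2: {efficient:.0f} kWh, PUE 2.0: {inefficient:.0f} kWh")
```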

Embodied impact, meaning what it cost the planet to create the device, depends on materials, manufacturing, and logistics. Extending the useful life of equipment, when performance allows, can be among the most impactful choices. A balanced approach includes testing whether upgraded software or targeted component replacements (like storage or memory) can meet needs without full replacement. When new hardware is justified, energy-proportional designs and efficient accelerators can provide more work per watt. For AI specifically, training large models can consume substantial energy; published estimates suggest ranges from hundreds to thousands of megawatt-hours for frontier-scale runs, with emissions varying widely based on the energy mix. That reality favors reuse of trained models, fine-tuning on smaller datasets, and careful scheduling in regions with cleaner grids.

Responsible end-of-life handling matters as well. Global e‑waste has been estimated in the tens of millions of metric tons per year, and recovery rates for valuable materials remain uneven. Clear processes help:

– Maintain accurate asset inventories with purchase dates and specifications.

– Wipe data securely and verifiably before devices leave your control.

– Partner with certified recyclers who provide chain-of-custody documentation.

– Prefer modular designs and repairable components where feasible.

Procurement can embed sustainability without sacrificing performance. Practical tactics include selecting devices with published energy ratings, verifying thermal designs that allow components to run efficiently, and choosing form factors that match the deployment environment. On the cloud side, look for transparency: regional energy mixes, carbon accounting that includes scope 2 and (when available) scope 3 elements, and workload placement tools that surface greener choices. To keep priorities clear, teams can track two or three metrics alongside cost and performance:

– Work per watt (or per dollar): throughput per unit energy or cost.

– Carbon intensity of compute hours: grams of CO2e per kWh in the selected region.

– Device lifespan: planned years of service and actual realized years.
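
The three metrics above are simple ratios and products, so they are easy to compute from data most teams already have. The following is a sketch under assumed inputs; the function names and every number in the example are hypothetical.

```python
def work_per_kwh(items_processed: float, energy_kwh: float) -> float:
    """Throughput per unit of energy."""
    return items_processed / energy_kwh


def emissions_kg(energy_kwh: float, grid_g_co2e_per_kwh: float) -> float:
    """Estimated emissions: energy used times the grid's carbon intensity."""
    return energy_kwh * grid_g_co2e_per_kwh / 1000.0


def lifespan_ratio(actual_years: float, planned_years: float) -> float:
    """Above 1.0 means devices served longer than planned."""
    return actual_years / planned_years


energy = 300.0  # kWh for a hypothetical batch job
print(work_per_kwh(items_processed=1_200_000, energy_kwh=energy))  # items per kWh
print(emissions_kg(energy, grid_g_co2e_per_kwh=400.0))             # region A, kg CO2e
print(emissions_kg(energy, grid_g_co2e_per_kwh=100.0))             # region B, kg CO2e
print(lifespan_ratio(actual_years=5.0, planned_years=4.0))
```

In this illustration the same job emits a quarter as much in the region with the cleaner grid, which is why workload placement can matter as much as code efficiency.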

The goal is not perfection; it is steady improvement. By treating efficiency and lifecycle as first-class requirements, organizations often find they can lower operating expenses while reducing impact—a practical win for budgets and the environment.

Privacy‑by‑Design and Trust: Security as a Product Feature

As data flows through phones, laptops, sensors, and cloud services, privacy and security can no longer be bolted on at the end. They influence adoption, retention, and brand trust. Privacy-by-design means making deliberate choices that minimize the data collected, limit who can see it, and reduce how long it is kept. Concretely, that can look like processing sensitive data on-device when possible, sending only derived signals rather than raw content, and encrypting data in transit and at rest. For analytics, techniques such as pseudonymization, aggregation, and rate-limited queries help protect individuals while preserving utility.
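
As an illustration of two of those techniques, the sketch below pseudonymizes identifiers with a keyed hash (HMAC-SHA256 from the Python standard library) and aggregates records while suppressing small groups. The function names, the `region` field, and the minimum group size of 5 are assumptions chosen for the example; the appropriate threshold and key management depend on your own risk assessment.

```python
import hashlib
import hmac
from collections import Counter
from typing import Dict, Iterable


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash.

    The same input maps to the same token, so records can still be joined,
    but the original ID cannot be recomputed without the secret key.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def aggregate_counts(records: Iterable[Dict], min_group_size: int = 5) -> Dict[str, int]:
    """Count records per region, dropping groups too small to report safely."""
    counts = Counter(r["region"] for r in records)
    return {region: n for region, n in counts.items() if n >= min_group_size}


key = b"example-key-store-real-keys-in-a-secrets-manager"  # placeholder only
token = pseudonymize("user-1234", key)
print(token[:16], "...")

records = [{"region": "north"}] * 8 + [{"region": "south"}] * 2
print(aggregate_counts(records))  # the two-record "south" group is suppressed
```

Pseudonymized data can still be re-identified by anyone who holds the key or enough auxiliary information, so it complements access controls and retention limits rather than replacing them.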

Security is similarly evolving from perimeter defense to continuous verification. A zero-trust approach assumes networks are porous and identities can be impersonated, so each request must be verified in context. This involves strong authentication, least-privilege access, segmentation, and detailed logging. For edge deployments, secure boot and signed firmware protect against tampering. For AI systems, model and data governance address risks from training data contamination, prompt injection, and leakage of sensitive information. A practical governance checklist might include:

– Data inventories that track origins, consent, and retention periods.

– Model cards or documentation that describe intended use and known limitations.

– Human review policies for high-impact decisions, with clear escalation paths.

– Incident playbooks that define roles, communications, and recovery steps.
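
The first checklist item, a data inventory with retention periods, lends itself to a simple automated check. This is a minimal sketch: the `DataAsset` fields and the `overdue` helper are hypothetical, and the dates are fixed so the example is reproducible.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class DataAsset:
    """One inventory entry (fields are illustrative, not a standard schema)."""
    name: str
    origin: str
    consent_basis: str
    collected_on: date
    retention_days: int

    def expires_on(self) -> date:
        return self.collected_on + timedelta(days=self.retention_days)


def overdue(assets: List[DataAsset], today: date) -> List[str]:
    """Names of assets kept past their retention period."""
    return [a.name for a in assets if a.expires_on() < today]


inventory = [
    DataAsset("support_chat_logs", "helpdesk", "contract", date(2024, 1, 1), 90),
    DataAsset("newsletter_signups", "website", "consent", date(2024, 12, 1), 365),
]
print(overdue(inventory, today=date(2025, 1, 1)))
```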

Legal and regulatory landscapes also shape design choices. Many jurisdictions require transparency about data collection and provide rights like access and deletion. Even if certain rules do not apply to a given team today, aligning with their spirit sets a strong baseline. Privacy notices written in plain language, consent flows that are meaningful rather than perfunctory, and data portability features promote goodwill and reduce future migration costs.

From a business perspective, investments in privacy and security pay off by reducing breach likelihood, limiting the blast radius of incidents, and building user confidence. They also enable new products: if customers trust a platform to handle sensitive data responsibly, they are more willing to share the information that makes personalization and analytics useful. The right posture is measured and pragmatic: protect high-value assets rigorously, make defaults safe, and keep controls simple enough that people will use them correctly under pressure. Over time, security becomes not just a shield, but an enabler of reliable, trusted experiences.

A Practical Roadmap: Skills, Procurement, and Measurable Outcomes

Turning trends into outcomes requires intentional steps. Start with skills. A shared baseline across roles reduces friction: non-technical teams benefit from light AI literacy, data basics, and an understanding of privacy expectations; technical teams deepen proficiency in model lifecycle, edge deployment patterns, and secure coding. Short workshops and internal playbooks go a long way, especially when paired with realistic examples from the organization’s own workflows. Encourage a culture of small experiments—each with a clear hypothesis, a metric, and a short timebox—so that learning compounds without large sunk costs.

Procurement and architecture decisions shape costs and flexibility for years. To avoid lock-in without sacrificing momentum, favor open standards and modular components where practical. For AI, evaluate whether task-specific smaller models suffice before reaching for larger, more resource-intensive alternatives. For edge, verify environmental tolerances and support lifecycles. In both cases, plan for observability and updates from the start. A decision record template helps keep choices transparent:

– Purpose: the specific problem the purchase solves.

– Alternatives: at least two approaches considered, with trade-offs.

– Metrics: success criteria that include performance, cost, and risk.

– Lifecycle: expected lifespan, update policy, and end-of-life plan.
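
The template above can also be kept as structured data so that incomplete records are caught early. The sketch below is one possible shape, not a prescribed format; the `DecisionRecord` class, its `problems` method, and the example content are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DecisionRecord:
    purpose: str
    alternatives: List[str]
    metrics: List[str]
    lifecycle: str

    def problems(self) -> List[str]:
        """Return a list of gaps; an empty list means the record is complete."""
        issues = []
        if not self.purpose.strip():
            issues.append("purpose is empty")
        if len(self.alternatives) < 2:
            issues.append("fewer than two alternatives considered")
        # The template asks for performance, cost, and risk criteria.
        for required in ("performance", "cost", "risk"):
            if not any(required in m.lower() for m in self.metrics):
                issues.append(f"no {required} metric")
        if not self.lifecycle.strip():
            issues.append("lifecycle plan is empty")
        return issues


record = DecisionRecord(
    purpose="Reduce defect-inspection latency on line 2",
    alternatives=["Cloud inference", "Edge gateway with local model"],
    metrics=["Performance: latency under 50 ms", "Cost: monthly spend", "Risk: data leaves site?"],
    lifecycle="5-year service life, quarterly signed updates, certified recycling",
)
print(record.problems())
```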

Governance should be right-sized. Heavy processes can stall progress; no process invites chaos. A light steering group can review pilots monthly, watch for overlapping efforts, and ensure privacy and security standards are applied consistently. Financially, complement total cost of ownership with value metrics: reduced cycle time, error-rate reductions, improved customer satisfaction, fewer incidents, or lower energy use. Translating these into annualized savings or capacity gains makes comparisons concrete and avoids the trap of adopting technology for its own sake.
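
Translating a cycle-time reduction into an annual figure is simple arithmetic. The sketch below assumes time saved can be valued at a loaded hourly cost; the function names and all inputs are hypothetical placeholders for your own pilot data.

```python
def annualized_savings(minutes_saved_per_task: float, tasks_per_year: int,
                       hourly_cost: float) -> float:
    """Value of time saved per year, in the same currency as hourly_cost."""
    return minutes_saved_per_task / 60.0 * tasks_per_year * hourly_cost


def net_annual_value(savings: float, annual_tco: float) -> float:
    """Savings minus the annual total cost of ownership."""
    return savings - annual_tco


savings = annualized_savings(minutes_saved_per_task=15, tasks_per_year=4000, hourly_cost=50.0)
print(f"Annualized savings: {savings:,.0f}")
print(f"Net annual value:   {net_annual_value(savings, annual_tco=20_000):,.0f}")
```

A negative net value is a useful result too: it signals that the pilot should be rescoped or stopped before more is spent.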

Finally, communicate outcomes in plain language. Dashboards and documents should show what changed, by how much, and what remains to be improved. Celebrate small wins that come from disciplined iteration. Over time, this approach builds a portfolio of capabilities—AI assistance in routine tasks, resilient edge operations where latency matters, greener infrastructure choices, and a privacy posture that earns trust. The cumulative effect is a technology foundation that is flexible, cost-aware, and aligned with real needs.

Conclusion: For Decision‑Makers Who Want Progress Without the Hype

Emerging technologies can feel overwhelming, but progress favors teams that move in measured steps. Focus on problems that matter, pick approaches that fit your constraints, and validate results with data. Use AI as a co‑pilot, not an oracle. Put compute where it makes sense—often closer to the data—while keeping an eye on energy and lifecycle impact. Treat privacy and security as features that earn trust. And above all, develop people and processes alongside tools. With that discipline, the next wave of innovation becomes an opportunity to deliver reliable, responsible improvements—one practical project at a time.